FUNDAMENTAL RIGHTS IMPACT ASSESSMENT

Assessing the Ethical and Legal Compliance of AI

Artificial intelligence can greatly enhance law enforcement agencies’ capabilities to prevent, investigate, detect and prosecute crimes, as well as to predict and anticipate them. However, despite these promised benefits, the use of AI systems in the law enforcement domain raises numerous ethical and legal concerns.

The ALIGNER Fundamental Rights Impact Assessment (AFRIA) is a tool addressed to law enforcement agencies (LEAs) that aim to deploy AI systems for law enforcement purposes within the EU. The AFRIA is a reflective exercise that seeks to strengthen LEAs’ existing legal and ethical governance systems by helping them build and demonstrate compliance with ethical principles and fundamental rights when deploying AI systems.

The AFRIA consists of two connected and complementary templates. Download links for both templates and an introduction to using them are provided below.

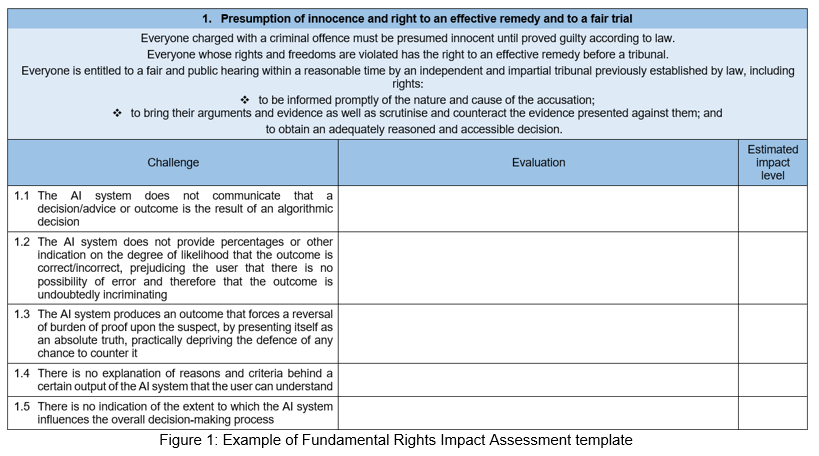

The Fundamental Rights Impact Assessment template

This template helps LEAs identify and assess the impact that the AI system they wish to deploy may have on the fundamental rights of individuals.

The AI System Governance template

This template helps LEAs identify, explain, and record possible measures to mitigate the negative impact that deploying the AI system may have on ethical principles and on the fundamental rights of individuals.

Below you can download a flyer that provides a brief overview of the Fundamental Rights Impact Assessment.